Julia Busiek, UC Newsroom

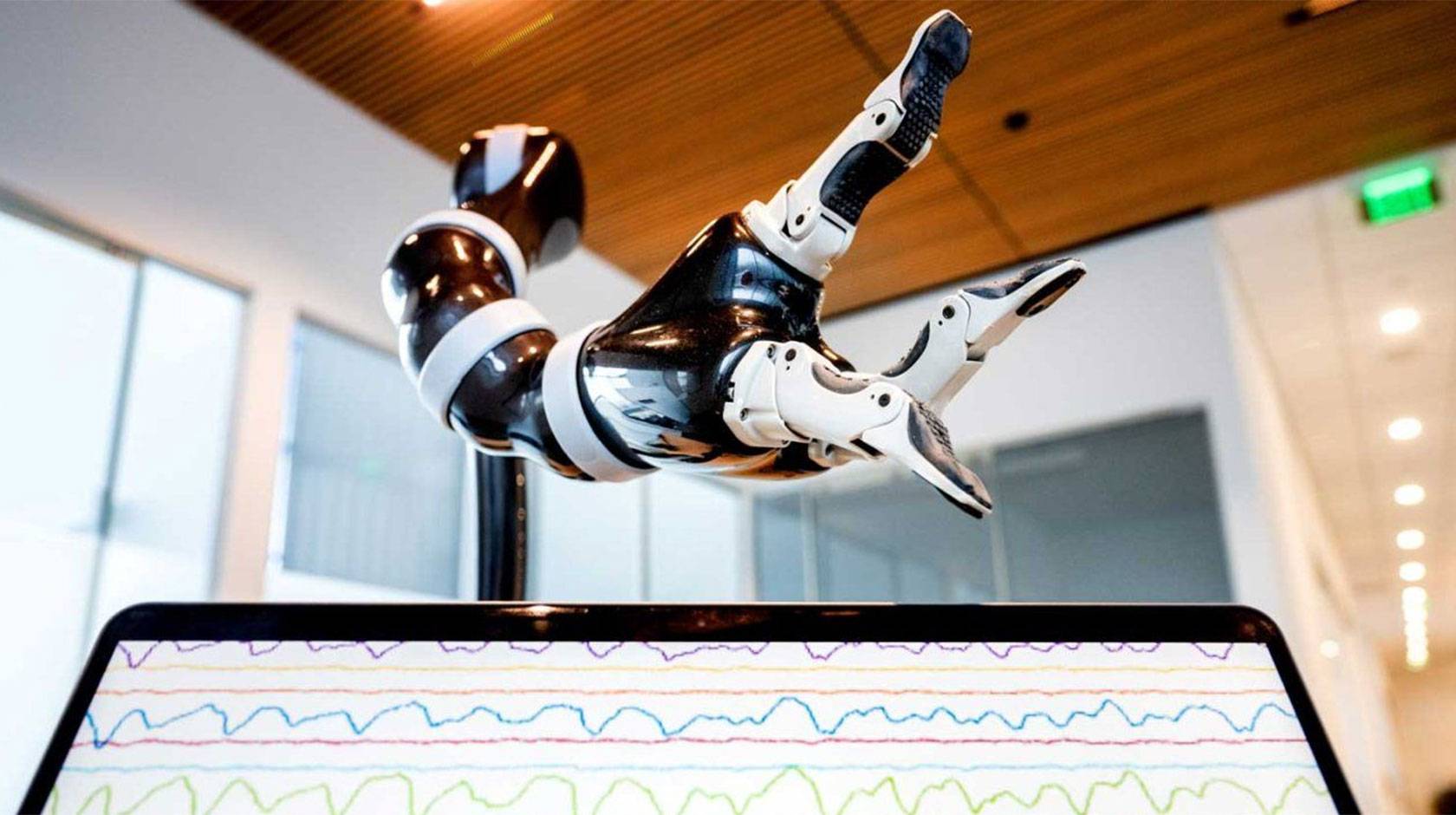

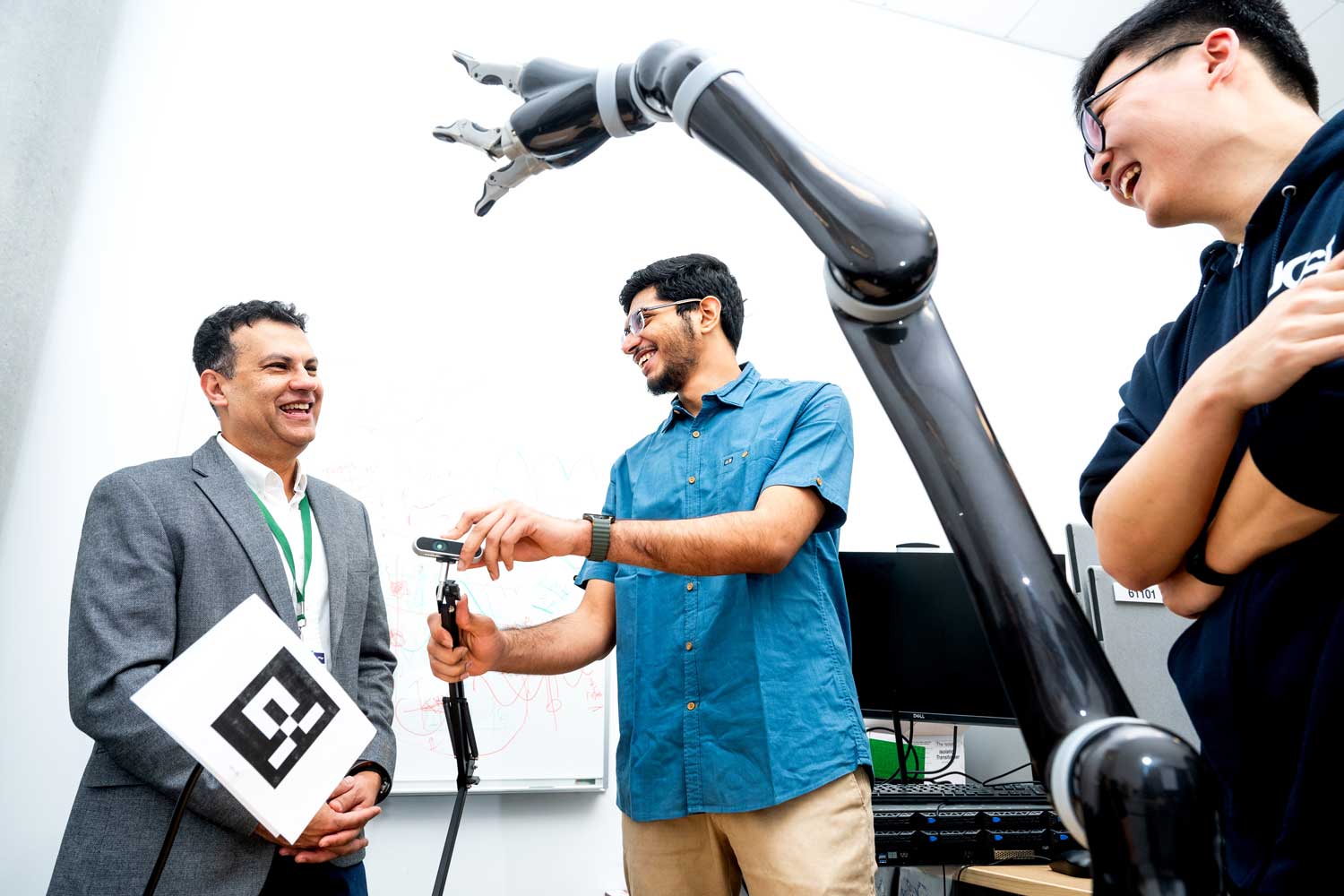

A man who hasn’t been able to move or speak for years imagines picking up a cup and filling it with water. In response to the man’s thoughts, a robotic arm mounted on his wheelchair glides forward, grabs the cup from a cabinet, and holds it up to a water fountain.

It sounds like something straight out of science fiction. But it’s just another day in the lab for UC San Francisco neurologist Karunesh Ganguly, one of many researchers across the University of California making progress in brain-computer interfaces aided by artificial intelligence.

At UC Davis, scientists have restored the voice of a man silenced by ALS by translating his brain activity into expressive speech through a computer speaker. At UC Santa Barbara, researchers are making bionic vision sharper and more useful. And the FDA recently approved a new Parkinson’s disease treatment, developed by UC San Francisco neurologist Simon Little, that uses personalized, adaptive deep brain stimulation to lessen symptoms.

“This blending of learning between humans and AI is the next phase for these brain-computer interfaces,” Ganguly said last year after the study was published. “It’s what we need to achieve sophisticated, lifelike function.”

Paralyzed by a stroke, man learns to control a robotic arm with his thoughts

Much research in brain-computer interfaces so far has focused on decoding brainwaves in the motor cortex, the swath of neurons that arcs across the top of your brain like a headband and is the region most associated with coordinating your body’s movement. Sensors attached to the scalp or surgically implanted in the motor cortex detect brainwaves when paralyzed patients are consciously trying to make a specific movement. Though the patients’ bodies don’t actually move, a connected computer translates those signals into instructions to move a cursor or, more recently, a robotic limb.

“The next big challenge is how to make this useful to people day-to-day,” says Ganguly at UC San Francisco.

One problem Ganguly is trying to solve: The computer’s interpretation of a person's brainwaves actually gets less accurate over time. Nobody really knew why until Ganguly discovered the cause of this drift. The electrical patterns our brains generate when doing a certain task today are not the same patterns they’ll generate when doing the same task tomorrow.

He also discovered that these day-to-day variations are especially pronounced while people are learning how to control a new device, and that the shifts appear to take place overnight. That makes sense, considering what we know about the crucial role of sleep in helping our brains organize and integrate new information.

So Ganguly programmed an AI model to account for changes in participants’ brainwaves over time. Now the system can accurately decode people’s intended movements, day after day and week after week, with no need to recalibrate. Ganguly is refining the AI models to make the robotic arm move faster and more smoothly, and he plans to test the system in a home environment.

Restoring real-time communication to people with paralysis

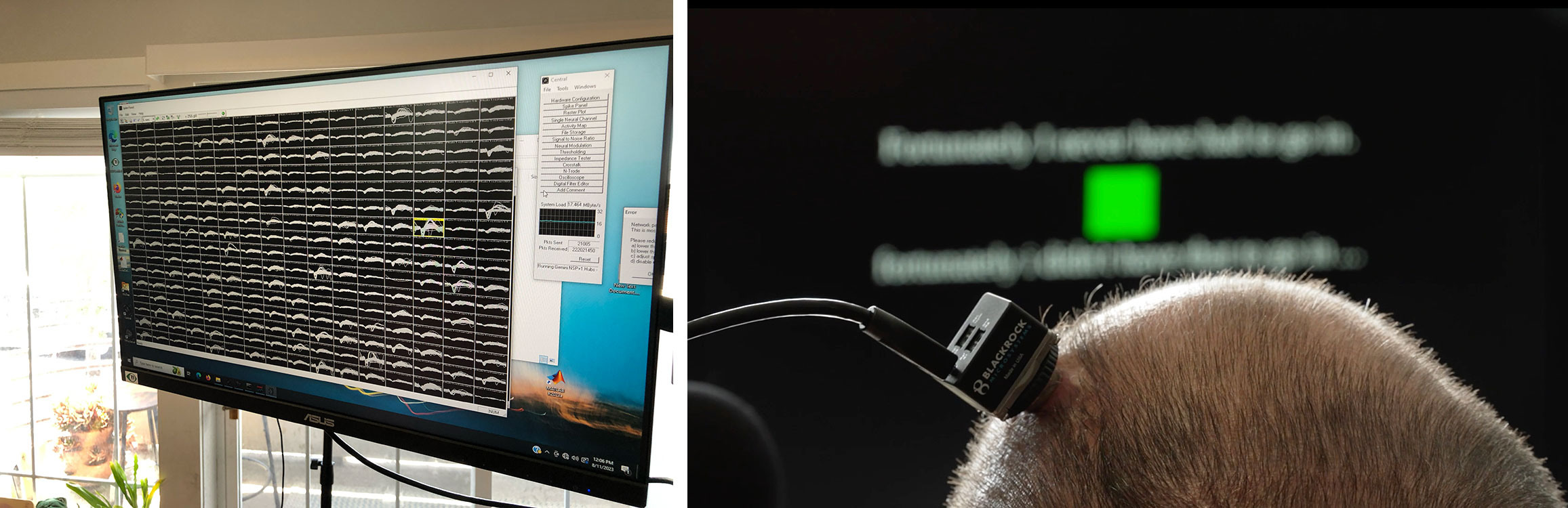

Thanks to twenty years of research in brain-computer interfaces, people who’ve lost the ability to speak can now control computer cursors through sensors implanted in the part of the brain that controls hand movement. That has made it possible to type messages that appear on a screen. That is itself a tremendous and hard-won victory for people who’d previously lost any ability to communicate, says Tyler Singer-Clark, a Ph.D. student in biomedical engineering at UC Davis.

And yet, compared to conversational speech, typing words one letter at a time is a relatively cumbersome way to communicate, Singer-Clark says. “You can imagine how much more efficient and intuitive a brain-computer interface that’s actually designed to translate speech would be. Instead of having to type out letters on a keyboard one by one, you could just say what you want to say.”

That’s the goal of the UC Davis Neuroprosthetics Lab, led by Sergey Stavisky and David Brandman, where Singer-Clark is a researcher. Researchers there do not ask participants to imagine moving their hand in a particular direction and then decode that brain activity into cursor instructions. Instead, they ask participants to try to speak words displayed on a screen in front of them.

“If we have the neural activity while participants are attempting to move their mouth and say words we’ve asked them to say, then we can train a computer to decode those movements and words,” Singer-Clark says. “From then on the computer can perform tasks, or say words, on the person’s behalf.”

Working with the lab’s scientists is a man who has been paralyzed by ALS, a progressive neurological disease. He has sensors implanted in the part of his brain responsible for speech, rather than the area responsible for moving the hand. In June, Neuroprosthetics Lab project scientist Maitreyee Wairagkar published a study, part of the BrainGate2 clinical trial, demonstrating that the team’s technology can instantaneously translate his brain activity into “speech” from a computer speaker.

“The brain-computer interface also emulated the cadence of the participant’s intended speech, effectively creating a digital vocal tract,” Wairagkar says. “This advance has restored the man’s ability to chat with his family, change his intonation and even ‘sing’ melodies.”

In a related study published this summer, Singer-Clark found that the participant in the speech study could use this same system to move a computer cursor in addition to generating speech, even though the sensors were not placed in his hand motor cortex.

“You get multiple functions for someone with paralysis to gain more independence in life. Now they can send emails, send texts, watch TV, make a PowerPoint presentation, and have a real-time conversation,” Singer-Clark says. “Users don’t have to choose between speech or computer control.”

UC San Francisco neurologist Simon Little developed a new, more effective approach to treating Parkinson's symptoms.

Adaptive treatment keeps Parkinson’s symptoms at bay

At UC San Francisco, neurologist Simon Little is using brain-computer interfaces and AI to improve a longstanding treatment for Parkinson’s disease. Known as deep brain stimulation, this treatment involves implanting a device in a patient’s brain that can deliver precise electrical pulses. These pulses override the malfunctioning brainwaves that cause Parkinson’s symptoms like weakness or tremors.

Until recently, the stimulation patients received was constant, even as their brain activity and resulting symptoms constantly changed. “You’d meet with your neurologist in a clinic, we’d program the stimulator, and then you’d be on that stimulation for the next few months,” Little says. “So sometimes your symptoms would be undertreated, and sometimes you’d get too much stimulation.”

The approach devised by Little and his colleagues uses the same implants that deliver electrical stimulation to also record the brainwave patterns associated with symptoms. Those recordings are then used to personalize therapy. The FDA approved this personalized approach earlier this year.

Little is also collaborating with UC San Francisco neurosurgeon Philip Starr to use artificial intelligence to recognize and treat brainwave patterns associated with too much medication. This approach reduced patients’ most bothersome motor symptoms by 50 percent. Now the team is applying a similar method to improve sleep and treat depression and anxiety in people with neurological disorders.

Michael Beyeler directs the UC Santa Barbara Bionic Vision Lab.

Bionic vision, aided by AI

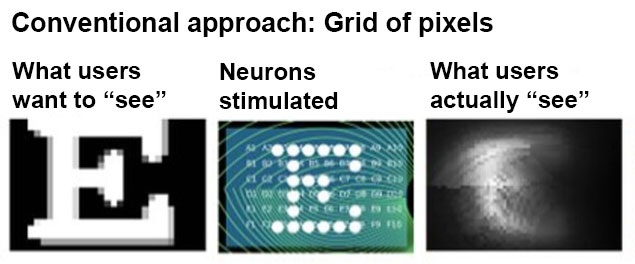

“Over 40 million people worldwide live with incurable blindness,” says UC Santa Barbara professor Michael Beyeler. Most of these cases are caused by hereditary conditions that damage photoreceptors, the cells in our eyes that detect light and convert it into electrical signals. Beyeler, who leads the UC Santa Barbara Bionic Vision Lab, aims to restore a rudimentary form of sight to people who’ve lost it.

Bionic vision has been around for a few years. It works via an implant in the back of the eye, which uses electricity to stimulate the nerve cells that deliver incoming information from photoreceptors to the brain for processing. In users with vision loss, this electrical stimulation aims to replace the signals that damaged photoreceptors would otherwise send.

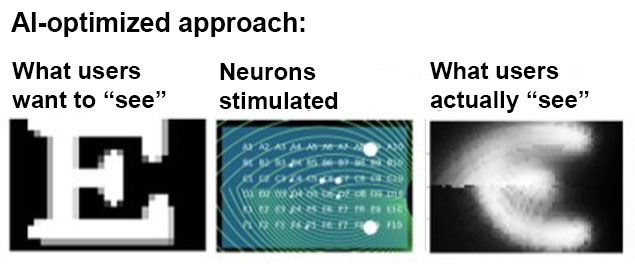

The problem is that the image the user’s mind ends up “seeing,” the so-called percept, isn’t sharp enough to be of much use in daily life. Part of the problem, Beyeler found, is that existing systems assume the eye works like a grid of pixels. Under that assumption, if you want someone to perceive a letter E, you just need to stimulate an E-shaped area of neurons behind their eye.

“We studied the neurobiology of the eye and discovered that retinal neurons are actually wired in complex ways and the brain interprets their signals nonlinearly,” Beyeler says. Just stimulating these cells in a simple pixel-like pattern can produce smudged or distorted percepts.

Using artificial intelligence, Beyeler is mapping out which cells to stimulate to generate a percept that is recognizable as the letter E, even though the pattern of stimulated neurons may look nothing like an E. In other words, rather than fight against the eye’s physiology, his lab is discovering how to work with it.

“Our main contribution has been to better understand how this transformation happens from electrical signals developed by an implant to a perceptual experience of what the people actually see,” Beyeler says.

From lab to clinic, powered by government funding

One thing all of these efforts have in common is support and funding from federal agencies such as the National Institutes of Health and the National Science Foundation. These same agencies are facing deep funding cuts in next year’s Congressional budget.

“We’re more than twenty years into progress in brain-computer interfaces, and these technologies are just finally getting to the point where industry is ready to take it up,” says Ganguly. For instance, Neuralink, a company co-founded a decade ago by Elon Musk, has garnered big private sector investment to fund its development of a cursor-control system for people with paralysis.

“What's being commercialized now is the thing that academics, with government funding, have been working on for two decades,” Ganguly says. “The science behind speech and robotic movement control, these more sophisticated challenges we’re working on at UC now, are still in their infancy. The only way we’ll keep making progress is if the government keeps investing in these experimental studies.”

CAUTION - Investigational Device. Limited by federal (or United States) law to investigational use.