David Danelski, UC Riverside

In an era where manipulated videos can spread disinformation, bully people, and incite harm, UC Riverside researchers have created a powerful new system to expose these fakes.

Amit Roy-Chowdhury, a professor of electrical and computer engineering, and doctoral candidate Rohit Kundu, both from UCR’s Marlan and Rosemary Bourns College of Engineering, teamed up with Google scientists to develop an artificial intelligence model that detects video tampering — even when manipulations go far beyond face swaps and altered speech. (Roy-Chowdhury is also the co-director of the UC Riverside Artificial Intelligence Research and Education (RAISE) Institute, a new interdisciplinary research center at UCR.)

Rohit Kundu and Amit Roy-Chowdhury

Their new system, called the Universal Network for Identifying Tampered and synthEtic videos (UNITE), detects forgeries by examining not just faces but full video frames, including backgrounds and motion patterns. This analysis makes it one of the first tools capable of identifying synthetic or doctored videos that do not rely on facial content.

“Deepfakes have evolved,” Kundu said. “They’re not just about face swaps anymore. People are now creating entirely fake videos — from faces to backgrounds — using powerful generative models. Our system is built to catch all of that.”

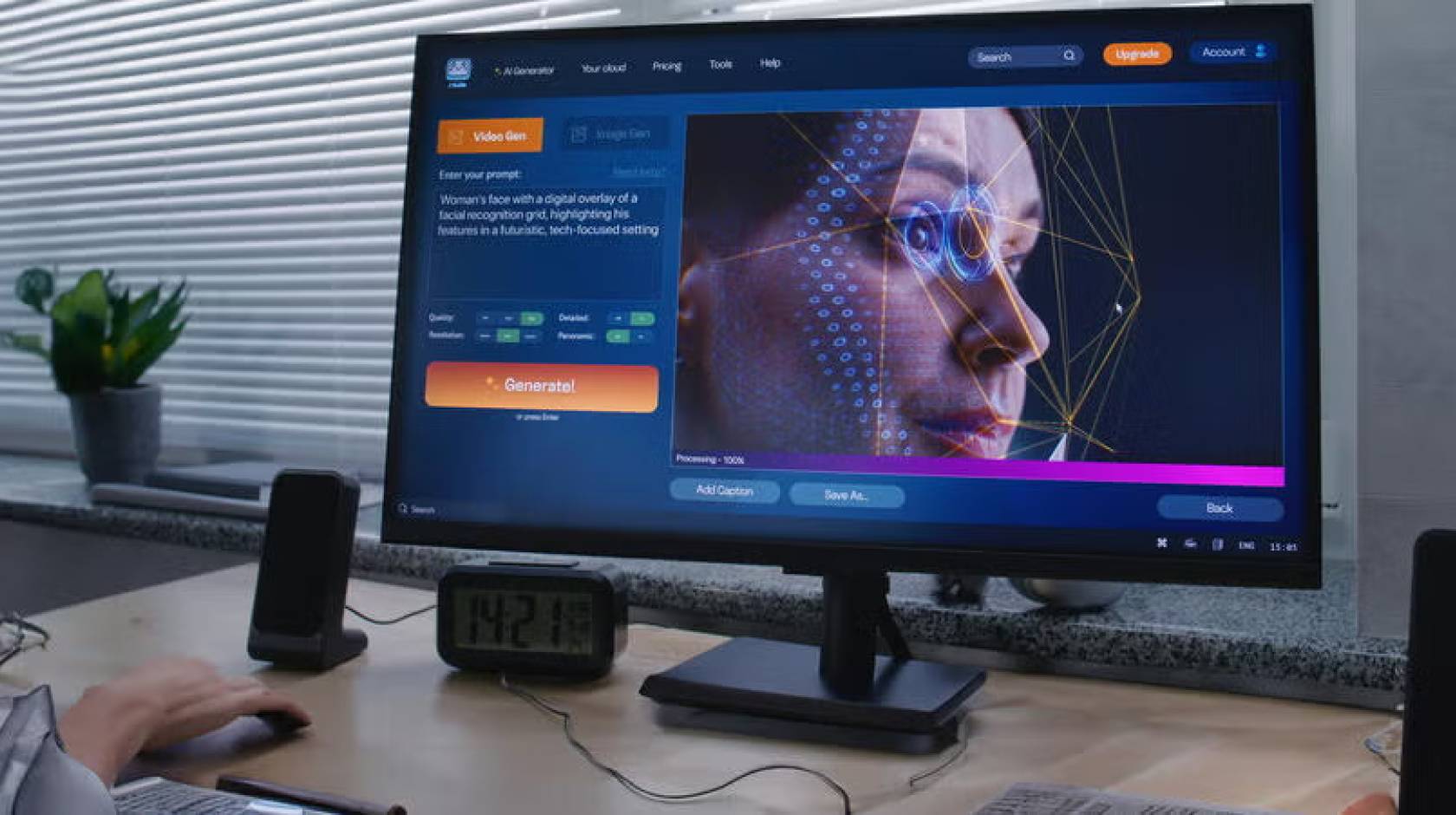

UNITE’s development comes as text-to-video and image-to-video generation have become widely available online. These AI platforms enable virtually anyone to fabricate highly convincing videos, posing serious risks to individuals, institutions, and democracy itself.

“It’s scary how accessible these tools have become,” Kundu said. “Anyone with moderate skills can bypass safety filters and generate realistic videos of public figures saying things they never said.”

Kundu explained that earlier deepfake detectors focused almost entirely on face cues.

“If there’s no face in the frame, many detectors simply don’t work,” he said. “But disinformation can come in many forms. Altering a scene’s background can distort the truth just as easily.”

To address this, UNITE uses a transformer-based deep learning model to analyze video clips. It detects subtle spatial and temporal inconsistencies, cues that previous systems often missed. The model builds on SigLIP, a foundation vision-language model, to extract features that are not bound to a specific person or object. A novel training objective, dubbed "attention-diversity loss," prompts the system to monitor multiple visual regions in each frame, preventing it from focusing solely on faces.
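The article does not give the exact formula for the attention-diversity loss, so the following is only a minimal sketch of the general idea, not UNITE's actual implementation. It assumes each attention head produces a distribution over image patches and penalizes heads that look at the same regions, using mean pairwise cosine similarity. All function names and the weighting parameter `lam` are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_diversity_penalty(attn: np.ndarray) -> float:
    """Hypothetical diversity penalty: mean pairwise cosine similarity
    between heads' spatial attention maps.

    attn has shape (num_heads, num_patches); each row is one head's
    attention over image patches. 1.0 means every head looks at the same
    place (e.g. only the face); 0.0 means the heads cover disjoint regions.
    """
    norms = np.linalg.norm(attn, axis=1, keepdims=True)
    unit = attn / np.maximum(norms, 1e-12)       # normalize each head's map
    sim = unit @ unit.T                          # (H, H) cosine similarities
    h = attn.shape[0]
    off_diag = sim[~np.eye(h, dtype=bool)]       # drop self-similarity terms
    return float(off_diag.mean())

def cross_entropy(logits: np.ndarray, label: int) -> float:
    """Standard classification loss for a real-vs-fake prediction."""
    probs = softmax(logits)
    return float(-np.log(probs[label] + 1e-12))

def total_loss(logits: np.ndarray, label: int, attn: np.ndarray,
               lam: float = 0.1) -> float:
    # Assumed combination: classification loss plus a weighted penalty
    # that grows when attention heads collapse onto the same patches.
    return cross_entropy(logits, label) + lam * attention_diversity_penalty(attn)

if __name__ == "__main__":
    focused = np.tile(np.eye(4)[0], (4, 1))   # all 4 heads on patch 0
    spread = np.eye(4)                        # each head on its own patch
    print(attention_diversity_penalty(focused))  # 1.0
    print(attention_diversity_penalty(spread))   # 0.0
```

In this sketch, heads that all fixate on one patch incur the maximum penalty, which is the behavior the article describes the loss as discouraging. A real system would compute this on differentiable attention tensors inside the training loop rather than on NumPy arrays.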

The result is a universal detector capable of flagging a range of forgeries — from simple facial swaps to complex, fully synthetic videos generated without any real footage.

“It’s one model that handles all these scenarios,” Kundu said. “That’s what makes it universal.”

The researchers presented their findings at the high-ranking 2025 Conference on Computer Vision and Pattern Recognition (CVPR) in Nashville, Tenn. Titled "Towards a Universal Synthetic Video Detector: From Face or Background Manipulations to Fully AI-Generated Content," their paper, led by Kundu, outlines UNITE's architecture and training methodology. Co-authors include Google researchers Hao Xiong, Vishal Mohanty, and Athula Balachandra. Co-sponsored by the IEEE Computer Society and the Computer Vision Foundation, CVPR is among the highest-impact scientific publication venues in the world.

The collaboration with Google, where Kundu interned, provided access to expansive datasets and computing resources needed to train the model on a broad range of synthetic content, including videos generated from text or still images — formats that often stump existing detectors.

Though still in development, UNITE could soon play a vital role in defending against video disinformation. Potential users include social media platforms, fact-checkers, and newsrooms working to prevent manipulated videos from going viral.

“People deserve to know whether what they’re seeing is real,” Kundu said. “And as AI gets better at faking reality, we have to get better at revealing the truth.”